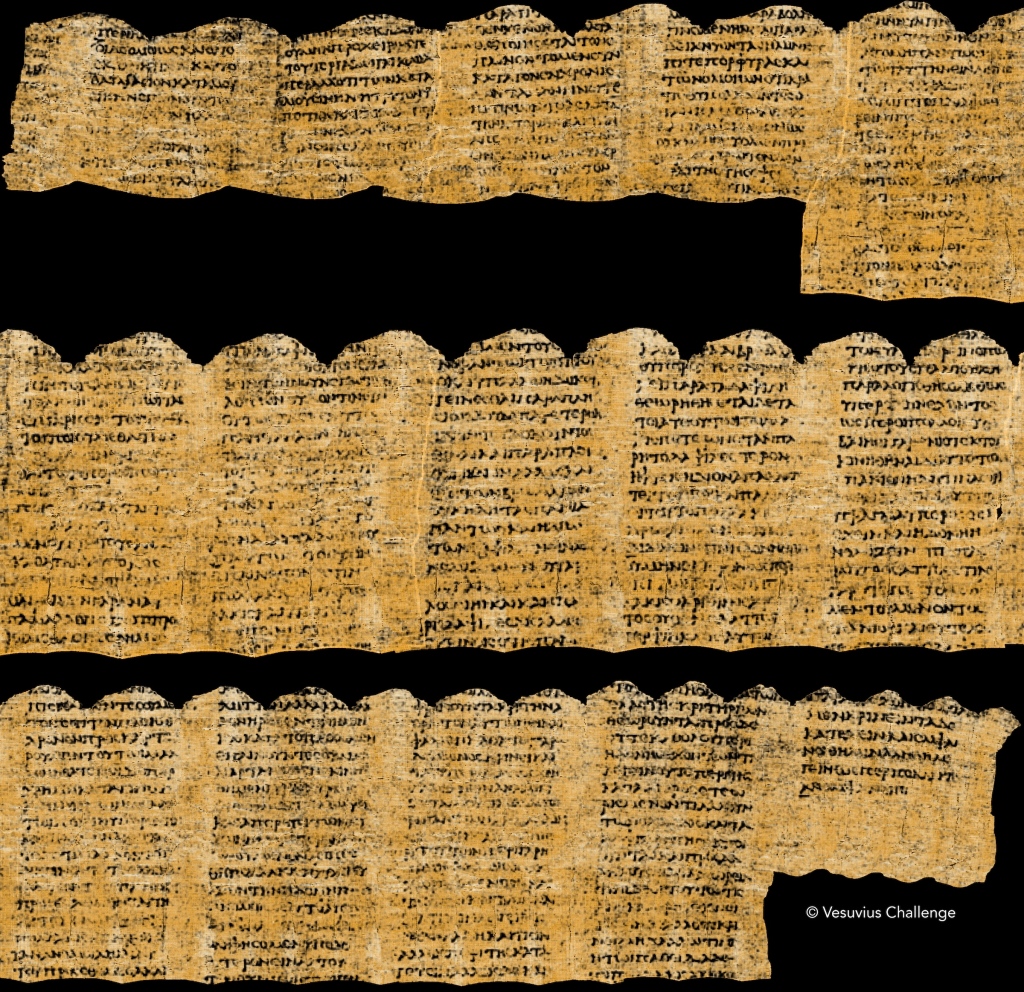

The exciting news is out and my team has won the Grand Prize of the Vesuvius Challenge. I am eternally grateful to being part of such an incredible community and having the chance to contribute to something so amazing. This work was built on the shoulders of giants, first and foremost prof. Seales and his team (I pretty much had Stephen’s Thesis open at all times), many amazing papers by FAIR and deepmind, and so many open contributions from the community.

In this post, I want to share the exciting details of my work on ink detection for the past couple of months.

From Kaggle to first letters

The kaggle ink detection focused on some small fragments for which we had a known ground truth for. The kaggle challenge had quite some interesting solutions, I spent a long time reading and trying out stuff from the top 10 winners, some of the techniques turned to only apply for the small kaggle dataset, while other continued to be a part of my final GP solution.

Here are the key takeaways from the kaggle competition that I found most interesting:

- the majority of the submissions used heavy augmentations 2d augmentations per channel (tiff slice)

- Resnet3d was used in 4 different solutions, segformer in another 2

- Translation invariance is a big performance booster, 4th place z-translation was one I used throughtout the whole challenge.

- The majority of solutions used test time augmentations (TTA), I only found this needed for the kaggle data though. For the first letters/GP I found that the model already handles different rotations and orientations well

- It is possible to operate on a lower resolution output, the second place winner mentioned that even size/32 works well. The majority of decoders and U-net architectures typically produce a size/4 map, and then upsample to initial resolution. I did most of the ink detection on size/4 output map, in the case of TimeSformer it was size/16 in order to maintain a smaller grid of predictions, more on that later.

Activity Recognition at a glance

The kaggle ink detection challenge sent me down the rabbit hole of learning more about activity recognition and 3d classification. Activity recognition typically deals with trying to classify a video sequence

I started from this paper about 3d resnets, which lead me to the authors’ work and their 3d-pytorch-resnet repository. I then went on to check the benchmarks they used, and a quick search on the Kinematics-700 benchmark landed me on TimeSformer (I am aware there are more recent architectures to date).

My key takeaways were as follows:

- The main question is how to treat temporal dimensions, most papers agree that it shouldn’t be the same as spatial dimensions

- Non-local blocks boost the performance of base 3d models, by merging global information into local context with some merging scheme (normal distribution, dot product, etc. )

- Two flow networks operate with 2 branches, kind of like seperate networks, one network goes really fast in time, one network goes slowly. Before the MLP layer you merge the representations from the 2 branches

- A lot of recent papers explore masked-autoencoder pretraining, which is an intersting idea given the amount of scroll data we have, but requires solving 2 main challenges:

- what kind of masking makes sense for ink data, tube masking might not be very benefecial, because ink is not contained in all depth slices, additionally.

- lots of compute and lots of testing. How big should the windows be, how many layers should be used

from first letters to ink banner

The key difference between my first letters model and my ink banner was based on the second takeaway. I added a non-local block to the I3D and switched from mean-pooling features to max-pooling. I noticed a slight improvement in performance immediatly, though I didn’t get to test them seperately. I ran only one experiment in the final days of the competition where I removed the non-local block, and I was not happy with the performance because some letters were missed.

The main training setup was unchanged between first letters and ink banner. The main key to generalization was using a small model like I3D, because from pre-first-letters to the ink banner models, there was not a lot of data for training the models, and Resnet3D-50 would overfit in a couple of epochs, despite not being so big itself. I3D delivers almost the same performance at less than 1/2 the number of parameters. Other factors for like the learning rate, label smoothing, data balance and heavy augmentations help generalization and prevent overfitting. Preventing overfitting helped the model go from decent results on a single segment to showing good results on multiple segments, and improving from one training round to the next.

Timesformer, the how and why

The idea behind using Timesformer was to seek a more efficient way to represent the 3d structures. My instincts were that 3d attention/convolution might be too brittle given the size of labeled data we have. My idea was to try to extract representations from 2d slices and then treat the representation as a sequence. The first attempt was a Conv-LSTM combination, it kind of worked but was quite wonky for the kaggle competition. A better model I came across was the Resnet (2+1)D, which simply does a spatial convolution then a temporal convolution. I was impressed with the results, but it was a lot slower than 3d convnets.

Timesformer is a intersting work in the field of 3d image classification. The paper presented an interesting premise of exploring the similar idea of divided space time modeling. They presented a study over various types of attention based 3d modeling, and found divided space-time attention to be the most performant. For spatio-temporal attention, the model performs (NxF+1) comparisons, N being the spatial sequence length and F being the temporal sequence length. Compare that with divided space time attention which only does (N+F+2) comparisons, it presents itself as a more efficient memory friendly and powerful option. I highly recommend reading the TimeSformer paper if you have time.

One thing remained a problem for TimeSformer, it didn’t work for scroll data, or at least so I thought. The majority of Kaggle ink detection used a U-net or even chained 2 U-nets together, TimeSformer is only an encoder so it’s only nature to pair it with a decoder, right?

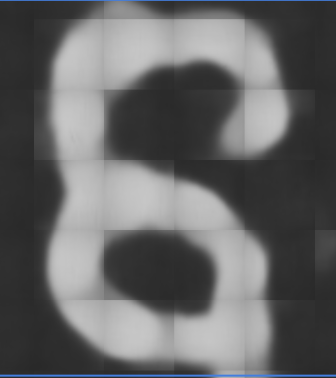

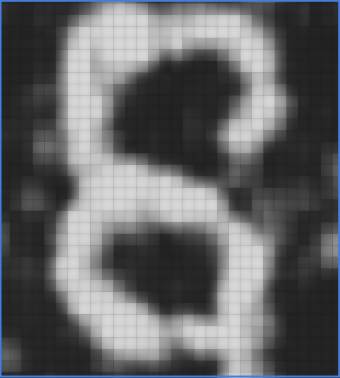

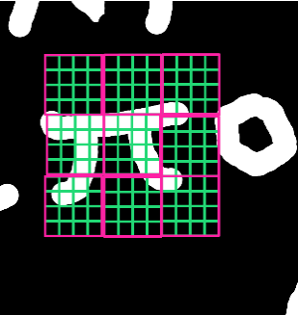

On a desperation attempt, I got rid of the decoder on the off chance it’s getting in the way of the TimeSformer. Transformers are often known to be sensitive to learning setup and hyperparameters. The idea is to use the TimeSformer as an encoder only model, and do a multiple output classification, then rearrange the predictions into a grid. To my surprise, this worked, and the results of TimeSformer were solid, it looked like quite an improvement, or at least, different.

Now, the TimeSformer produces a vector of 16 values, I rearrange them in a grid with torch.view. The input is 64×64 and the output is now 4×4. We can upsample the output or downsample the label map to 4×4. I went for the later option.

x=x.view(-1,1,4,4)

Window size selection

Window size selection was one of the rather controversial design points for the models, from a CS point of view, larger models produced cleaner, well cut predictions that seem to outperform smaller contexts, at least metrics wise. However, upon closer inspection, I noticed that smaller context models (64×64) had the upperhand over larger context models (256×256 and 512×512).

Due to the large context of the models, they are easier to bias for a couple of reasons.

Data is usually labeled with a fixed thickness brush, however the brush strokes are often not the same size, the thickness of the brush stroke varies from one part of the letter to the other. For the same label, 256×256 gets biased to output a fixed brush width around crackle, something that is much harder for 64×64 model to do due to limited context.

A less common bias, is letter completion/filling. The size of the dataset plays an important part in countering this, but during the early days of the first letters experiments, I noticed that 512×512 models might often get tempted to turn a C into an O

Finally, I often labeled my data using a combo of a 256×256 and a 64×64 models outputs. What I started to notice later on, was that the strokes of a 64×64 are reliable and easier to interpret than the 256×256 which gives an impression that everything is merging into one another.

Training details

Sampling

The way you sample training patches affects the density of ink labels and subsequently the luminosity and ink bias of the model. If the dataset is 10% ink and 90% negative space, the model would be biased to only output ink when it’s completely certain, and would become more conservative. If the data is more balanced, the more balanced, the model becomes unhinged, and the prediction image is a lot brighter. My idea was to sample windows only around the labeled letter, this way we minimize the chance of introducing false negatives from unlabeled regions while balancing the dataset with negative areas around the letters. For a 64×64 tile size, taking only 64×64 window around the letters results in a 50%/50% ink and brighter predictions. For better contrast, we can just sample 256×256 windows around ink, and chop them up into 64×64 windows. This is how TimeSformer is trained.

Data labeling

I did most of my labeling in photoshop-like software, namely photoshop online and affinity designer. The main purpose of the data labeling process is to transform the predictions of a model into a cleaner/better inklabel map to train a new model. In this video I try to show a short demo of how I did my labeling for the challenge, you can find the complete labeled dataset on github aswell.

Some considerations to keep in mind while labeling:

- Avoid scattering labelings as much as possible. Because we sample a window around the labeled ink strokes, if your labels are too scattered, they might introduce false negatives as well. I mostly tried to either label the entire line at once, or at least have it cutoff only on one side

- labeling only strong signal that is very well predicted by the model is unlikely to lead to performance gains, I noticed that most improvements come from labeling weak signals. You can experiment with labeling weak areas of signals and then checking if the signal improves after training.

Challenges of evaluating models

I found that using dice score/bce loss is useful to ensure that the model is learning and converging properly. However, dice and bce only measure what we know is there, the key problem is assessing what we do NOT know, e.g is the model finding more letters from one round to the next?

I found it more efficient comparing images for a certain segment where the signal is faint, and monitoring how the signal improves visually. I would typically load 2 predictions from 2 rounds, and try to find how much improvement there is. The imrpovement from one round to the next is often faint, but it adds up!

Outro and Future Ideas:

We spent a lot of time trying to figure out where the lost letters are. We tried to make sure that segmentation and ink detection are not missing out on some signals. Luke spent quite some time manually resegmenting a couple of the large segments, as well as creating new segments from those. In the end our consensus was that there is a small loss in the segmentation, but not enough for it to be the game changer. The segmentation team already did an incredible job with their segmentation speed and accuracy (Kudos to Ben,David and Konrad). There are also some areas that are very well segmented, yet the predictions do not show anything. That leaves out either possible damage to the scroll itself, or some signal that needs to be reinforced/enhanced in the ink detection.

I am sure both segmentation and ink detection are going to improve a lot in 2024. The most exciting and surprising news while working with Julian’s Thaumato segmentations, was that a quick segmentation of the outer most sheet of the scroll was showing signs of life! I thought that as the most exposed sheet it might be too damaged, but to my surprise some letters were visible!

Future ideas:

- Scroll pretraining, pretraining is kind of the main drive behind the best activity recognition models for the past couple of years, we also potentially have a ton of data for pretraining, the main challenge of course is the size of the model/data required for good pretrained models. Pretraining often benefits from larger architectures and as much data as possible. One idea that I think is most exciting is I-JEPA, switching 2d ViT with a TimeSformer, unfortunately I didn’t get time to test this out.

- Labeling was mostly done manually, and this has some speed limitations. In the end, a lot of the models predictions were not used in training. It would also be interesting to refine the inklabels with the papyrologists annotations. Scaling up the models and using as much data as we possibly have right now would help reveal whether ink detection has saturated or not.

- An interesting idea from Julian is to apply the gaussian weighting to the training loss aswell, so the model focuses on the signal it can see, rather than the signal it has no context for.

The code base for our submission can be found here:

ink detection code: https://github.com/younader/Vesuvius-Grandprize-Winner

automatic segmentation code: https://github.com/schillij95/ThaumatoAnakalyptor

Feel free to reach with questions, suggestions of ideas to cover or dive deep into.

Thank you for tuning in for my journey with the Vesuvius Challenge.

In a way, it feels like building on my ancestors’ innovative spirit from 5,000 years ago with their invention of Papyrus sheets. By bringing an ancient scroll back from the ashes, we continue to celebrate and document the incredible story of human ingenuity.

Leave a comment